Continued Fractions: Haskell, Equational Reasoning, Property Testing, and Rewrite Rules in Action

Overview

In this article, we’ll develop a Haskell library for continued fractions. Continued fractions are a different representation for real numbers, besides the fractions and decimals we all learned about in grade school. In the process, we’ll build correct and performant software using ideas that are central to the Haskell programming language community: equational reasoning, property testing, and term rewriting.

I posted an early version of this article to Reddit, and I’m grateful to those who responded with insight and questions that helped me complete this journey, especially iggybibi and lpsmith.

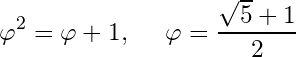

Introduction to continued fractions

Let’s start with a challenge. Assume I know how to write down an integer. Now, how can I write down a real number? In school, we’ve all learned a few answers to this question. The big two are fractions and decimals.

Fractions have simple forms for rational numbers. Just write two integers, for the numerator and denominator. That’s great! But alas, they are no good at all for irrational numbers like π, or the square root of 2. I could, of course, write a fraction that’s close to the irrational number, such as 22/7 as an approximation of π. But this is only good up to a certain limited precision. To get a closer approximation (say, 355/113), I have to throw it away and start over. Sure, I could write an infinite sequence of fractions which converge to the rational number, but every finite prefix of such a sequence is redundant and wasted.

Irrationals are just the limiting case of rational numbers, and very precise rational numbers suffer from this, too. It’s not easy to glance at a fraction like 103993/33102 and get a sense of its value. (Answer: this is another approximation of π.) Or, can you quickly tell whether 5/29 is larger or smaller than 23/128?

Instead, we can turn to decimals. If you’re like me, you don’t think about the mechanics of decimal numbers very often, and conventional notation like 3.14159… really hides what’s going on. I’d like to offer a different perspective by writing it like this:

Here, d₀ is the integer part, and d₁, d₂, d₃, d₄, etc. are called the decimal digits. Notice the symmetry here: after the integer part, a decimal has inside of it another decimal representing its tenths.

This recursive structure leads to the main property that we care about with decimals: we can truncate them. If I have a hundred-digit decimal number, and don’t need that much accuracy, I can simply chop off what I don’t need to get a simpler approximation. This is the same property that lets me write a decimal representation of an irrational number. As long as we accept that infinite sequences exist — and as Haskell programmers, infinite data structures don’t scare us…. much — there is a unique infinite sequence of digits that represents any irrational number. The sequence must be infinite is to be expected, since there are uncountably many irrationals, and any finite representation has only countable values. (Unfortunately, this uniqueness isn’t quite true of rational numbers, since 0.4999… and 0.5 are the same number, for example!)

As nice as that is, though, there are also some significant disadvantages to decimals as a representation:

- Although a few rational numbers have simple decimal representations, almost all rational numbers actually need infinite repeating decimals. Specifically, a rational number whose denominator has any prime factor other than 2 or 5 repeats indefinitely.

- Why 10? This is a question for historians, anthropologists, perhaps biologists, but has no answer within mathematics. It is a choice that matters, too! In a different base, a different subset of the rational numbers will have finite decimals. It’s the choice of base 10 that makes it harder to compute with 1/3. Having to make an arbitrary choice about something that matters so much is unfortunate.

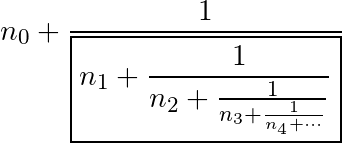

We can make up some ground on these disadvantages with a third representation: continued fractions. A continued fraction is an expression of this form:

Just like with decimals, this gives a representation of a real number as a (possibly finite, or possibly infinite) sequence [n₀, n₁, n₂, n₃, n₄, …]. Now we call the integer parts terms, instead of digits. The first term, n₀, is the integer part. The difference is that when we have a remainder to write, we take its reciprocal and continue the sequence with that.

Note:

- Continued fractions represent all rational numbers as finite sequences of terms, while still accounting for all irrationals using infinite sequences.

- Continued fractions do not depend on an arbitrary choice of base. The reciprocal is the same regardless of how we choose to write numbers.

- Continued fractions, like decimals, can be truncated to produce a rational approximation. Unlike decimals, though, the approximations are optimal in the sense that they are as close as possible to the original number without needing a larger denominator.

With this motivation in mind, let’s see what we can do about using continued fractions to represent real numbers.

Part 1: Types and Values

Time to write some code. As a declarative language that promotes equational reasoning, Haskell is a joy to use for problems like this one.

If you want to see it all together, the code and tests for this part can be found at https://code.world/haskell#PVxpksYe_YAcGw3CNdvEFzw.

To start, we want a new type for continued fractions. A continued fraction is a (finite or infinite) sequence of terms, so we could simply represent it with a Haskell list. However, I find it more readable to define a new type so that we can choose constructors with suggestive names. (It helps with this decision that standard list combinators like map or ++ don’t have obvious interpretations for continued fractions.) I’ve defined the type like this.

data CFrac where

(:+/) :: Integer -> CFrac -> CFrac

Inf :: CFracderiving instance Eq CFrac

deriving instance Show CFracinfixr 5 :+/

Here, x :+/ y means x + 1/y, where x is an integer, and y is another continued fraction (the reciprocal of the remainder). This is the basic building block of continued fractions, as we saw above.

The other constructor may be surprising, though. I’ve defined a special continued fraction representing infinity! Why? We’ll use it for terminating fractions. When there is no remainder, we have x = x + 0 = x + 1/∞, so Inf is the reciprocal of the remainder for a continued fraction that represents an integer. That we can represent ∞ itself as a top-level continued fraction wasn’t the goal, but it doesn’t seem worth dodging. Noticing that an empty list of terms acts like infinity is actually a great intuition for things to come.

There is a simple one-to-one correspondence between continued fractions and their lists of terms, as follows:

- The

:+/operator corresponds to the list cons operator,:. - The

Infvalue corresponds to the empty list,[].

We can define conversions to witness this correspondence between CFrac and term lists.

terms :: CFrac -> [Integer]

terms Inf = []

terms (n :+/ x) = n : terms xfromTerms :: [Integer] -> CFrac

fromTerms = foldr (:+/) Inf

Rational numbers can be converted into continued fractions by following the process described above, and the code is very short.

cfFromFrac :: Integer -> Integer -> CFrac

cfFromFrac _ 0 = Inf

cfFromFrac n d = n `div` d :+/ cfFromFrac d (n `mod` d)cfFromRational :: Rational -> CFrac

cfFromRational r = cfFromFrac (numerator r) (denominator r)

The real fun of continued fractions, though, is that we can represent irrational numbers precisely, as well!

Rational numbers are precisely the solutions to linear equations. The simplest irrational numbers to write as continued fractions are the quadratic irrationals: numbers that are not rational, but are solutions to quadratic equations, and these are precisely the continued fractions with infinitely repeating terms. We can write a function to build these:

cycleTerms :: [Integer] -> CFrac

cycleTerms ns = fix (go ns)

where

go [] x = x

go (t : ts) x = t :+/ go ts xAnd then we can write a quick catalog of easy continued fractions, including some small square roots and the golden ratio.

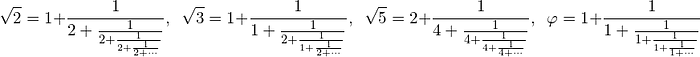

sqrt2 :: CFrac

sqrt2 = 1 :+/ cycleTerms [2]sqrt3 :: CFrac

sqrt3 = 1 :+/ cycleTerms [1, 2]sqrt5 :: CFrac

sqrt5 = 2 :+/ cycleTerms [4]phi :: CFrac

phi = cycleTerms [1]

It’s really worth pausing to think how remarkable it is that these fundamental quantities, whose decimal representations have no obvious patterns at all, are so simple as continued fractions!

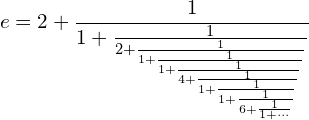

And it doesn’t end there. Euler’s constant e, even though it’s a transcendental number, also has a simple pattern as a continued fraction.

exp1 :: CFrac

exp1 = 2 :+/ fromTerms (concatMap (\n -> [1, 2 * n, 1]) [1 ..])This really strengthens the claim I made earlier, that being based on the reciprocal instead of multiplying by an arbitrary base makes continued fractions somehow less arbitrary than decimals.

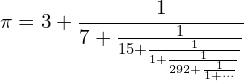

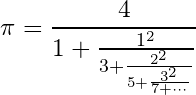

I wish I could tell you that π has a similar nice pattern, but alas, it does not. π has a continued fraction just as random as its representation in other notations, and just as hard to compute to arbitrary precision.

It will be interesting later, though, to look at its first few terms. For that, it’s good enough to use the Double approximation to π from Haskell’s base library. It’s not really π; in fact, it’s a rational number! But it‘s close enough for our purposes. Computing an exact value of π needs some more machinery, which we will develop in the next section.

approxPi :: CFrac

approxPi = cfFromRational (realToFrac (pi :: Double))Canonical forms

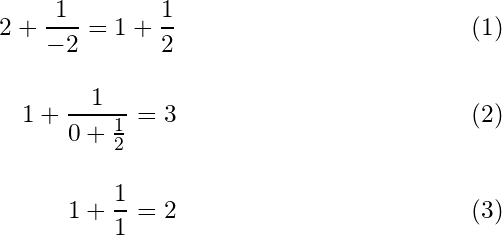

We’ll now return to a more theoretical concern. We want each real number to have a unique continued fraction representation. In fact, I snuck something by you earlier when I derived an Eq instance for CFrac, because that instance is only valid when the type has unique representations. However, this isn’t quite true in general. Here are some examples of continued fractions that are written differently, but have the same value:

Case (1) deals with negative numbers. These will actually cause quite a few problems — not just now, but in convergence properties of algorithms later, too. I have been quietly assuming up to this point that all the numbers we’re dealing with are non-negative. Let’s make that assumption explicit, and require that all continued fraction terms are positive. That takes care of case (1).

This might seem like a terrible restriction. In the end, though, we can recover negative numbers in the same way humans have done so for centuries: by keeping track of a sign, separate from the absolute value. A signed continued fraction type, then, would wrap CFrac and include a Bool field for whether it’s negative. This is entirely straight-forward, and I leave it as an exercise for the interested reader.

Case (2) involves a term with a value of zero. It’s normal for the integer part of a continued fraction to be zero. After that, though, the remaining terms should never be zero, because they are the reciprocals of numbers less than one. So we will impose a second rule that only the first term of a continued fraction may be zero.

Case (3) involves a rational continued fraction with 1 as its final term. It turns out that even after solving cases (1) and (2), every rational number has two distinct continued fractions: one that has a 1 that is not the integer part as its last term, and one that doesn’t. This is analogous to the fact that terminating decimals have two decimal representations, one ending in an infinite sequence of 0s, and the other in an infinite sequence of 9s. In this case, the trailing 1 is longer than it needs to be, so we’ll disallow it, making a third rule that a term sequence can never end in 1, unless that 1 is the integer part.

Subject to these three rules, we get the canonical forms we wanted. It’s unfortunate that non-canonical forms are representable in the CFrac type (i.e., we have failed to make illegal data unrepresentable), but we can do the next best thing: check that our computations all produce canonical values. To do so, let’s write some code to check that a CFrac obeys these rules.

isCanonical :: CFrac -> Bool

isCanonical Inf = True

isCanonical (n :+/ cont) = n >= 0 && isCanonicalCont contisCanonicalCont :: CFrac -> Bool

isCanonicalCont Inf = True

isCanonicalCont (1 :+/ Inf) = False

isCanonicalCont (n :+/ cont) = n > 0 && isCanonicalCont cont

Property testing

One very powerful idea that originated in the Haskell community is property testing. We state a property that we want to verify about our code, and allow a testing framework like to QuickCheck to make up examples and test them. Now is a great time to try this out. Here I’ve defined a few properties around canonical forms. The first is a trivial property that just asserts that we make the right decision about the specific examples above. The second, less trivial, guarantees that our cfFromRational function, the only real semantic function we’ve written, produces canonical forms.

prop_isCanonical_examples :: Bool

prop_isCanonical_examples =

not (isCanonical (2 :+/ (-2) :+/ Inf))

&& isCanonical (1 :+/ 2 :+/ Inf)

&& not (isCanonical (1 :+/ 0 :+/ 2 :+/ Inf))

&& isCanonical (3 :+/ Inf)

&& not (isCanonical (1 :+/ 1 :+/ Inf))

&& isCanonical (2 :+/ Inf)prop_cfFromRational_isCanonical :: NonNegative Rational -> Bool

prop_cfFromRational_isCanonical (NonNegative x) =

isCanonical (cfFromRational x)

(We could try to check that the irrational values are canonical, as well, but alas, we run into non-termination since they are infinite. This will be a theme: we’ll have to be satisfied with checking rational test cases, and relying on the fact that any bugs with irrational values will manifest in some nearby rational value.)

We can now run our code for this section. Try it yourself at https://code.world/haskell#PVxpksYe_YAcGw3CNdvEFzw. In addition to running the tests, we’ll print out our example continued fractions so we can see them.

Testing prop_isCanonical_examples

+++ OK, passed 1 test.Testing prop_cfFromRational_isCanonical

+++ OK, passed 100 tests.3/7 = 0 :+/ (2 :+/ (3 :+/ Inf))

sqrt2 = 1 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2...

sqrt3 = 1 :+/ (1 :+/ (2 :+/ (1 :+/ (2 :+/ (1 :+/ (2 :+/ (1...

sqrt5 = 2 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4...

phi = 1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1...

e = 2 :+/ (1 :+/ (2 :+/ (1 :+/ (1 :+/ (4 :+/ (1 :+/ (1...

approxPi = 3 :+/ (7 :+/ (15 :+/ (1 :+/ (292 :+/ (1 :+/ (1 :+/ (1 :+/

(2 :+/ (1 :+/ (3 :+/ (1 :+/ (14 :+/ (3 :+/ (3 :+/ (2 :+/ (1 :+/

(3 :+/ (3 :+/ (7 :+/ (2 :+/ (1 :+/ (1 :+/ (3 :+/ (2 :+/ (42 :+/

(2 :+/ Inf))))))))))))))))))))))))))

Part 2: Conversions

In the previous section, we converted from rational numbers into continued fractions. We now turn to the inverse conversion: from a continued fraction to a rational number.

The code and tests for this part can be found at https://code.world/haskell#PemataxO6pmYz99dOpaeT9g.

Computing convergents

Not all continued fractions correspond to rational numbers. However, one of the key benefits of the continued fraction representation is that its truncated term sequences represent particularly efficient rational approximations. These rational approximations are called the convergents. It’s not hard to write a simple recursive function to compute the convergents.

naiveConvergents :: CFrac -> [Rational]

naiveConvergents Inf = []

naiveConvergents (n :+/ r) =

fromInteger n :

map (\x -> fromInteger n + 1 / x) (naiveConvergents r)We can also use QuickCheck to write a property test, verifying that a rational number is its own final convergent. This gives a sanity check on our implementation.

prop_naiveConvergents_end_at_Rational ::

NonNegative Rational -> Property

prop_naiveConvergents_end_at_Rational (NonNegative r) =

last (naiveConvergents (cfFromRational r)) === rYou may guess from my choice of name that I am not happy with this implementation. The problem with naiveConvergents is that it recursively maps a lambda over the entire tail of the list. As it recurses deeper into the list, we build up a bunch of nested mapped lambdas, and end up evaluating O(n²) of these lambdas to produce only n convergents.

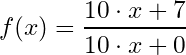

Mobius transformations

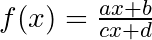

Let’s fix that quadratic slowdown. A more efficient implementation can be obtained by defunctionalizing. The lambdas have the form \x -> fromInteger n + 1 / x. To avoid quadratic work, we need a representation that lets us compose functions of this form into something that can be applied in constant time. What works is mobius transformations.

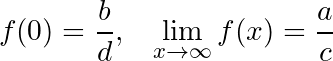

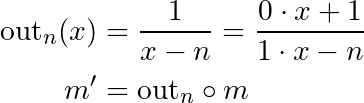

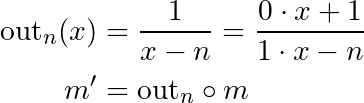

A mobius transformation is a function of the form:

For our purposes, the coefficients a, b, c, and d are always integers. Instead of an opaque lambda, we represent a mobius transformation by its four coefficients.

data Mobius where

Mobius :: Integer -> Integer -> Integer -> Integer -> Mobiusderiving instance Eq Mobius

deriving instance Show Mobius

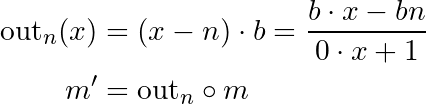

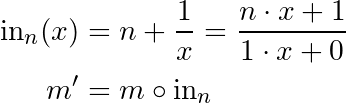

We seek to rewrite our computation of convergents using mobius transformations. First of all, we will restructure it as a left fold, which exposes the mobius transformation itself as an accumulator. We’ll want these building blocks:

- An identity mobius transformation, to initialize the accumulator.

- A composition to combine two mobius transformations into a single one.

In other words, mobius transformations should form a monoid!

instance Semigroup Mobius where

Mobius a1 b1 c1 d1 <> Mobius a2 b2 c2 d2 =

Mobius

(a1 * a2 + b1 * c2)

(a1 * b2 + b1 * d2)

(c1 * a2 + d1 * c2)

(c1 * b2 + d1 * d2)instance Monoid Mobius where

mempty = Mobius 1 0 0 1

There are axioms for monoids: mempty should act like an identity, and <> should be associative. We can test these with property tests.

This is as good a time as any to set up a QuickCheck generator for mobius transformations. To keep things sane, we want to always want to choose mobius transformations that have non-zero denominators, since otherwise the function is undefined on all inputs. We will also want non-negative coefficients in the denominator, since this ensures the transformation is defined (and monotonic, which will matter later on) for all non-negative input values.

instance Arbitrary Mobius where

arbitrary =

suchThat

( Mobius

<$> arbitrary

<*> arbitrary

<*> (getNonNegative <$> arbitrary)

<*> (getNonNegative <$> arbitrary)

)

(\(Mobius _ _ c d) -> max c d > 0) shrink (Mobius a b c d) =

[ Mobius a' b' c' d'

| (a', b', NonNegative c', NonNegative d') <-

shrink (a, b, NonNegative c, NonNegative d),

max c' d' > 0

]

With the generator in place, the tests are trivial to write:

prop_Mobius_id :: Mobius -> Property

prop_Mobius_id m = mempty <> m === m .&&. m <> mempty === mprop_Mobius_assoc :: Mobius -> Mobius -> Mobius -> Property

prop_Mobius_assoc m1 m2 m3 = (m1 <> m2) <> m3 === m1 <> (m2 <> m3)

Faster convergents

Now we return to computing convergents. Here is the improved implementation.

convergents :: CFrac -> [Rational]

convergents = go mempty

where

go m Inf = []

go m (n :+/ x) = mobiusLimit m' : go m' x

where

m' = m <> Mobius n 1 1 0 mobiusLimit (Mobius a _ c _) = a % c

In the expression, go m x, m is a mobius transformation that turns a convergent of x into a convergent for the entire continued fraction. When x is the entire continued fraction, m is the identity function. As we recurse into the continued fraction, we compose transformations onto m so that this remains true. The lambda \x -> fromInteger n + 1 / x from the naive implementation is now defunctionalized into Mobius n 1 1 0.

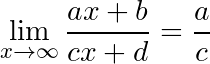

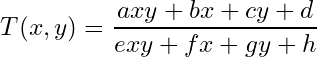

The remaining task is to compute a truncated value of the continued fraction at each step. Recall that a terminating continued fraction is one which has an infinite term. To determine this truncated value, then, we want to consider the limit of the accumulated mobius transformation as its input tends to infinity. This is:

and that completes the implementation. A quick test helps us to be confident in the result.

prop_convergents_matches_naive :: NonNegative Rational -> Property

prop_convergents_matches_naive (NonNegative r) =

convergents (cfFromRational r)

=== naiveConvergents (cfFromRational r)Converting to decimals

Let’s also consider the conversion from continued fractions to decimals. This time, both representations can be approximated by just truncating, so instead of producing a sequence of approximations as we did with rational numbers, we will just a single decimal that lazily adds to the representation with more precision.

Mobius transformations are still a good tool for this job, but we need to refine our earlier observations. Consider the general form of a mobius transformation again:

It’s worth taking the time now to play around with different choices for a, b, c, and d, and what they do to the function. You can do so with the Desmos link below:

We previously noted one of these bounds, and now add the other:

As long as c and d are positive (remember when we agreed to keep them so?), f is monotonic on the entire interval [0, ∞), so f is bounded by these two fractions. In particular, if a/c and b/d have the same integer part, then we know this is the integer part of the result of f, regardless of the

(always non-negative) value of x. We can express this insight as a function:

mobiusIntPart :: Mobius -> Maybe Integer

mobiusIntPart (Mobius a b c d)

| c /= 0 && d /= 0 && n1 == n2 = Just n1

| otherwise = Nothing

where

n1 = a `div` c

n2 = b `div` dWe can now proceed in a manner similar to the computation of convergents. We maintain a mobius transformation which maps the remaining continued fraction to the remaining decimal. Initially, that is the identity. But this time, instead of blindly emitting an approximation at each step, we make a choice:

- If the mobius transformation is bounded to guarantee the integer part of its result, then we can produce that decimal digit. We then update the transformation m to yield the remaining decimal places after that. This will tend to widen its bounds.

- Otherwise, we will pop off the integer part of the continued fraction, and update the transformation to expect the remaining continued fraction terms after that. This will tend to narrow its bounds, so we’ll be closer to producing a new decimal digit.

- If we ever end up with the zero transformation (that is, a and b are both zero), then all remaining decimal places will be zero, so we can stop. If we encounter an infinite term of input, though, we still need to continue, but we can update b and d to match a and c, narrowing those bounds to a single point to indicate that we now know the exact value of the input.

Here is the implementation:

cfToBase :: Integer -> CFrac -> [Integer]

cfToBase base = go mempty

where

go (Mobius 0 0 _ _) _ = []

go m x

| Just n <- mobiusIntPart m,

let m' = Mobius base (-base * n) 0 1 <> m

= n : go m' x

go m (n :+/ x) = go (m <> Mobius n 1 1 0) x

go (Mobius a _ c _) Inf = go (Mobius a a c c) InfcfToDecimal :: CFrac -> String

cfToDecimal Inf = "Inf"

cfToDecimal x = case cfToBase 10 x of

[] -> "0.0"

[z] -> show z ++ ".0"

(z : digits) -> show z ++ "." ++ concatMap show digits

To test this, we will compare the result to standard Haskell output. However, Haskell’s typical Show instances for fractional types are too complex, producing output like 1.2e6 that we don’t want to match. The Numeric module has the simpler functionality we want. There are some discrepancies due to rounding error, as well, so the test will ignore differences in the last digit of output from the built-in types.

prop_cfToDecimal_matchesRational :: NonNegative Rational -> Property

prop_cfToDecimal_matchesRational (NonNegative r) =

take n decFromRat === take n decFromCF

where

decFromRat = showFFloat Nothing (realToFrac r :: Double) ""

decFromCF = cfToDecimal (cfFromRational r)

n = max 10 (length decFromRat - 1)Generalized Continued Fractions

Earlier, we settled for only an approximation of π, and I mentioned that π doesn’t have a nice pattern as a continued fraction. That’s true, but it’s not the whole story. If we relax the definition of a continued fraction just a bit, there are several known expressions for π as a generalized continued fraction. The key is to allow numerators other than 1.

Here’s one that’s fairly nice:

Generalized continued fractions don’t have many of the same nice properties that standard continued fractions do. They are not unique, and sometimes converge very slowly (or not at all!), yielding poor rational approximations when truncated. But we can compute with them using mobius transformations, using the same algorithms from above. In particular, we can use the same tools as above to convert from a generalized continued fraction to a standard one.

First, we’ll define a type for generalized continued fractions.

data GCFrac where

(:+#/) :: (Integer, Integer) -> GCFrac -> GCFrac

GInf :: GCFracderiving instance Show GCFrac

This time I haven’t defined an Eq instance, because representations are not unique. Converting from a standard to a generalized continued fraction is trivial.

cfToGCFrac :: CFrac -> GCFrac

cfToGCFrac Inf = GInf

cfToGCFrac (n :+/ x) = (n, 1) :+#/ cfToGCFrac xThe conversion in the other direction, though, requires a mobius-like algorithm.

gcfToCFrac :: GCFrac -> CFrac

gcfToCFrac = go mempty

where

go (Mobius a _ c _) GInf = cfFromFrac a c

go m gcf@((int, numer) :+#/ denom)

| Just n <- mobiusIntPart m =

n :+/ go (Mobius 0 1 1 (- n) <> m) gcf

| otherwise = go (m <> Mobius int numer 1 0) denomWe can write a property test to verify that, at least, this gives a correct round trip from continued fractions to generalized, and back to standard again.

prop_GCFrac_roundtrip :: NonNegative Rational -> Property

prop_GCFrac_roundtrip (NonNegative r) =

gcfToCFrac (cfToGCFrac x) === x

where

x = cfFromRational rHere’s the definition of π above, expressed in code, and converted to a continued fraction. I’ve written a test to verify that it mostly agrees with the approximate value we defined earlier, just as a sanity check on the implementation.

gcfPi = (0, 4) :+#/ go 1

where

go i = (2 * i - 1, i ^ 2) :+#/ go (i + 1)exactPi = gcfToCFrac gcfPiprop_correct_pi :: Property

prop_correct_pi =

take 17 (cfToDecimal approxPi)

=== take 17 (cfToDecimal exactPi)

Results

We now have built enough to get some interesting results. For example, we have exact representations several irrational numbers, and we can use those to obtain both rational approximations and long decimal representations of each.

In addition to running our tests, we will print the continued fraction terms, convergents, and decimal representation of each of our test values. Check out the full code at https://code.world/haskell#PemataxO6pmYz99dOpaeT9g.

Testing prop_naiveConvergents_end_at_Rational

+++ OK, passed 100 tests.Testing prop_Mobius_id

+++ OK, passed 100 tests.Testing prop_Mobius_assoc

+++ OK, passed 100 tests.Testing prop_convergents_matches_naive

+++ OK, passed 100 tests.Testing prop_cfToDecimal_matchesRational

+++ OK, passed 100 tests.Testing prop_GCFrac_roundtrip

+++ OK, passed 100 tests.Testing prop_correct_pi

+++ OK, passed 1 test.3/7:

- terms: 0 :+/ (2 :+/ (3 :+/ Inf))

- frac : [0 % 1,1 % 2,3 % 7]

- dec : 0.428571428571428571428571428571428571428571428571sqrt2:

- terms: 1 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2 :+/ (2

- frac : [1 % 1,3 % 2,7 % 5,17 % 12,41 % 29,99 % 70,239 % 1

- dec : 1.414213562373095048801688724209698078569671875376sqrt3:

- terms: 1 :+/ (1 :+/ (2 :+/ (1 :+/ (2 :+/ (1 :+/ (2 :+/ (1

- frac : [1 % 1,2 % 1,5 % 3,7 % 4,19 % 11,26 % 15,71 % 41,9

- dec : 1.732050807568877293527446341505872366942805253810sqrt5:

- terms: 2 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4 :+/ (4

- frac : [2 % 1,9 % 4,38 % 17,161 % 72,682 % 305,2889 % 129

- dec : 2.236067977499789696409173668731276235440618359611phi:

- terms: 1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1 :+/ (1

- frac : [1 % 1,2 % 1,3 % 2,5 % 3,8 % 5,13 % 8,21 % 13,34 %

- dec : 1.618033988749894848204586834365638117720309179805e:

- terms: 2 :+/ (1 :+/ (2 :+/ (1 :+/ (1 :+/ (4 :+/ (1 :+/ (1

- frac : [2 % 1,3 % 1,8 % 3,11 % 4,19 % 7,87 % 32,106 % 39,

- dec : 2.718281828459045235360287471352662497757247093699approxPi:

- terms: 3 :+/ (7 :+/ (15 :+/ (1 :+/ (292 :+/ (1 :+/ (1 :+/

- frac : [3 % 1,22 % 7,333 % 106,355 % 113,103993 % 33102,1

- dec : 3.141592653589793115997963468544185161590576171875exactPi:

- terms: 3 :+/ (7 :+/ (15 :+/ (1 :+/ (292 :+/ (1 :+/ (1 :+/

- frac : [3 % 1,22 % 7,333 % 106,355 % 113,103993 % 33102,1

- dec : 3.141592653589793238462643383279502884197169399375

Most of these are exact irrational values, so we can only look at a finite prefix of the convergents, which go on forever getting more and more accurate (at the expense of larger denominators). I’ve chopped them off at 50 characters, so that they comfortably fit on the screen, but you can change that to see as much of the value as you like.

With this data in front of us, we can learn more about continued fractions and how they behave.

Did you notice that the convergents of π jump from 355/113 all the way to 103993/33102? That’s because 355/113 is a remarkably good approximation of π. You have to jump to a much larger denominator to get a better approximation. You can observe this same fact directly from the continued fraction terms. The fifth term is 292, which is unusually large. 292 is the next term after the truncation that yields the convergent 355/113. Large terms like that in a continued fraction indicate unusually good rational approximations.

Conversely, what if there are no large continued fraction terms? More concretely, what can we say about the number that has all 1s as its continued fraction? That’s the golden ratio! What this means is that there are no particularly good rational approximations to the golden ratio. And you can see that, and hear it!

- Flowers tend to grow new petals or seeds at an angle determined by the golden ratio, specifically because there are no small intervals (i.e., small denominators) after which they will overlap.

- Musical notes sound harmonic when their frequencies are close to simple rational numbers. The least harmonic frequencies are those related by the golden ratio, and you can hear it!

(By the way, did you notice the fibonacci numbers in the convergents of the golden ratio? That’s a whole other topic I won’t get into here.)

Part 3: Arithmetic

Now that we’ve got a nice type and some conversions, let’s turn our attention to computing with continued fractions.

The code and tests for this section can be found at https://code.world/haskell#P_T4CkfWGu3bgSwFV0R04Sg.

Simple computations

There are a few computations that are easy, so let’s warm up with those.

Computing the reciprocal of a continued fraction turns out to be almost trivial, because continued fractions are built out of reciprocals! All we need to do for this one is shift all the terms by one… in either direction, really. Since our normal form only allows zero as the first term, we’ll make the decision based on whether the whole part is already zero. We also need a special case for 1 to prevent building a non-normal representation.

cfRecip :: CFrac -> CFrac

cfRecip (0 :+/ x) = x

cfRecip (1 :+/ Inf) = 1 :+/ Inf

cfRecip x = 0 :+/ xWe will test a few expected properties:

- The reciprocal is self-inverse.

- The result matches taking a reciprocal in the rationals.

- The reciprocal always gives an answer in normal form.

prop_recipRecip_is_id :: NonNegative Rational -> Property

prop_recipRecip_is_id (NonNegative r) =

r /= 0 ==> cfRecip (cfRecip (cfFromRational r))

=== cfFromRational rprop_cfRecip_matches_Rational :: NonNegative Rational -> Property

prop_cfRecip_matches_Rational (NonNegative r) =

r /= 0 ==> cfFromRational (recip r) === cfRecip (cfFromRational r)prop_cfRecip_isCanonical :: NonNegative Rational -> Property

prop_cfRecip_isCanonical (NonNegative r) =

r /= 0 ==> isCanonical (cfRecip (cfFromRational r))

Comparing two continued fractions is also not too difficult. It’s similar to comparing decimals, in that you look down the sequence for the first position where they differ, and that determines the result. However, since we take reciprocals at each term of a continued fraction, we’ll need to flip the result at each term.

cfCompare :: CFrac -> CFrac -> Ordering

cfCompare Inf Inf = EQ

cfCompare _ Inf = LT

cfCompare Inf _ = GT

cfCompare (a :+/ a') (b :+/ b') = case compare a b of

EQ -> cfCompare b' a'

r -> rLet’s write a QuickCheck property to verify that the result matches the Ord instance for Rational, and then define an Ord instance of our own.

prop_cfCompare_matches_Rational ::

NonNegative Rational -> NonNegative Rational -> Property

prop_cfCompare_matches_Rational (NonNegative x) (NonNegative y) =

compare x y === cfCompare (cfFromRational x) (cfFromRational y)instance Ord CFrac where

compare = cfCompare

Arithmetic with rational numbers

To make any progress on the rest of basic arithmetic, we must return to our trusty old hammer: the mobius transformation. Recall that we’ve used the mobius transformation in two ways so far:

- To produce convergents, we produced mobius transformations from terms, composed them together, and also produced a new approximation at each step.

- To produce decimals, we looked at the bounds on the result of a mobius transformation, and proceeded in one of two ways: produce a bit of output, and then add a new mobius transformation to the output side; or consume a term of input, and then add a new mobius transformation to the input side.

We will now implement mobius transformations that act on continued fractions and produce new continued fractions. The strategy is the same as it was when producing decimals, but modified to produce continued fraction terms as output instead of decimal digits.

That leads to this implementation.

cfMobius :: Mobius -> CFrac -> CFrac

cfMobius (Mobius a _ c _) Inf = cfFromFrac a c

cfMobius (Mobius _ _ 0 0) _ = Inf

cfMobius m x

| Just n <- mobiusIntPart m =

n :+/ cfMobius (Mobius 0 1 1 (- n) <> m) x

cfMobius m (n :+/ x) = cfMobius (m <> Mobius n 1 1 0) xThere are two properties to test here. The first is that computations on continued fractions match the same computations on rational numbers. To implement this test, we’ll need an implementation of mobius transformations on rational numbers. Then we’ll test that cfMobius gives results in their canonical form. For both tests, we don’t care about transformations whose rational results are negative, as we will just agree not to evaluate them.

mobius :: (Eq a, Fractional a) => Mobius -> a -> Maybe a

mobius (Mobius a b c d) x

| q == 0 = Nothing

| otherwise = Just (p / q)

where

p = fromInteger a * x + fromInteger b

q = fromInteger c * x + fromInteger dprop_cfMobius_matches_Rational ::

Mobius -> NonNegative Rational -> Property

prop_cfMobius_matches_Rational m (NonNegative r) =

case mobius m r of

Just x

| x >= 0 ->

cfMobius m (cfFromRational r) === cfFromRational x

_ -> discardprop_cfMobius_isCanonical ::

Mobius -> NonNegative Rational -> Property

prop_cfMobius_isCanonical m (NonNegative r) =

case mobius m r of

Just rat

| rat >= 0 ->

let x = cfMobius m (cfFromRational r)

in counterexample (show x) (isCanonical x)

_ -> discard

The punchline here is that, using an appropriate mobius transformation, we can perform any of the four arithmetic functions — addition, subtraction, multiplication, or division — involving one continuous fraction and one rational number.

Binary operations on continued fractions

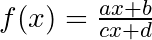

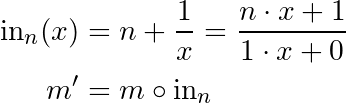

This was all sort of a warm-up, though, for the big guns: binary operations on two continued fractions, yielding a new continued fraction. Here, for the first time, our trusted mobius transformations fail us. Indeed, this remained an unsolved problem until 1972, when Bill Gosper devised a solution. That solution uses functions of the form:

Again, for our purposes, all coefficients will be integers, and the coefficients in the denominator will always be positive. Since these are the generalization of mobius transformations to binary operations, we can call them the bimobius transformations.

data Bimobius where

BM ::

Integer ->

Integer ->

Integer ->

Integer ->

Integer ->

Integer ->

Integer ->

Integer ->

Bimobiusderiving instance Eq Bimobius

deriving instance Show Bimobius

The rest of the implementation nearly writes itself! We simply want to follow the same old strategy. We keep a bimobius transformation that computes the rest of the output, given the rest of the inputs. At each step, we emit more output if possible, and otherwise consume a term from one of two inputs. As soon as either input is exhausted (if, indeed that happens), the remaining calculation reduces to a simple mobius transformation of the remaining argument.

First, we need an analogue of mobiusIntPart, which was how we determined whether an output was available.

bimobiusIntPart :: Bimobius -> Maybe Integer

bimobiusIntPart (BM a b c d e f g h)

| e /= 0 && f /= 0 && g /= 0 && h /= 0

&& n2 == n1

&& n3 == n1

&& n4 == n1 =

Just n1

| otherwise = Nothing

where

n1 = a `div` e

n2 = b `div` f

n3 = c `div` g

n4 = d `div` hThe other building block we used to implement mobius transformations was composition with the Monoid instance. Unfortunately, that’s not so simple, since bimobius transformations have two inputs. There are now three composition forms. First, we can compose the mobius transformation on the left, consuming the output of the bimobius:

(<>||) :: Mobius -> Bimobius -> Bimobius

-- (mob <>|| bimob) x y = mob (bimob x y)

Mobius a1 b1 c1 d1 <>|| BM a2 b2 c2 d2 e2 f2 g2 h2 =

BM a b c d e f g h

where

a = a1 * a2 + b1 * e2

b = a1 * b2 + b1 * f2

c = a1 * c2 + b1 * g2

d = a1 * d2 + b1 * h2

e = c1 * a2 + d1 * e2

f = c1 * b2 + d1 * f2

g = c1 * c2 + d1 * g2

h = c1 * d2 + d1 * h2We can also compose it on the right side, transforming either the first or second argument of the bimobius.

(||<) :: Bimobius -> Mobius -> Bimobius

-- (bimob ||< mob) x y = bimob (mob x) y

BM a1 b1 c1 d1 e1 f1 g1 h1 ||< Mobius a2 b2 c2 d2 =

BM a b c d e f g h

where

a = a1 * a2 + c1 * c2

b = b1 * a2 + d1 * c2

c = a1 * b2 + c1 * d2

d = b1 * b2 + d1 * d2

e = e1 * a2 + g1 * c2

f = f1 * a2 + h1 * c2

g = e1 * b2 + g1 * d2

h = f1 * b2 + h1 * d2(||>) :: Bimobius -> Mobius -> Bimobius

-- (bimob ||> mob) x y = bimob x (mob y)

BM a1 b1 c1 d1 e1 f1 g1 h1 ||> Mobius a2 b2 c2 d2 =

BM a b c d e f g h

where

a = a1 * a2 + b1 * c2

b = a1 * b2 + b1 * d2

c = c1 * a2 + d1 * c2

d = c1 * b2 + d1 * d2

e = e1 * a2 + f1 * c2

f = e1 * b2 + f1 * d2

g = g1 * a2 + h1 * c2

h = g1 * b2 + h1 * d2

That’s a truly dizzying bunch of coefficients! I’m definitely not satisfied without property tests to ensure they match the intended meanings. To do that, we need two pieces of test code.

First, we want to generate random bimobius transformations with an Arbitrary instance. As with mobius transformations, the instance will guarantee that the denominators are non-zero and have non-negative coefficients.

instance Arbitrary Bimobius where

arbitrary =

suchThat

( BM

<$> arbitrary

<*> arbitrary

<*> arbitrary

<*> arbitrary

<*> (getNonNegative <$> arbitrary)

<*> (getNonNegative <$> arbitrary)

<*> (getNonNegative <$> arbitrary)

<*> (getNonNegative <$> arbitrary)

)

(\(BM _ _ _ _ e f g h) -> maximum [e, f, g, h] > 0) shrink (BM a b c d e f g h) =

[ BM a' b' c' d' e' f' g' h'

| ( a',

b',

c',

d',

NonNegative e',

NonNegative f',

NonNegative g',

NonNegative h'

) <-

shrink

( a,

b,

c,

d,

NonNegative e,

NonNegative f,

NonNegative g,

NonNegative h

),

maximum [e', f', g', h'] > 0

]

And second, we want a simple and obviously correct base implementation of the bimobius transformation to compare with.

bimobius :: (Eq a, Fractional a) => Bimobius -> a -> a -> Maybe a

bimobius (BM a b c d e f g h) x y

| q == 0 = Nothing

| otherwise = Just (p / q)

where

p =

fromInteger a * x * y

+ fromInteger b * x

+ fromInteger c * y

+ fromInteger d

q =

fromInteger e * x * y

+ fromInteger f * x

+ fromInteger g * y

+ fromInteger hWith this in mind, we’ll check each of the three composition operators performs as expected on rational number, at least. There’s one slight wrinkle: when composing the two functions manually leads to an undefined result, the composition operator sometimes cancels out the singularity and produces an answer. I’m willing to live with that, so I discard cases where the original result is undefined.

prop_mob_o_bimob ::

Mobius -> Bimobius -> Rational -> Rational -> Property

prop_mob_o_bimob mob bimob r1 r2 =

case mobius mob =<< bimobius bimob r1 r2 of

Just ans -> bimobius (mob <>|| bimob) r1 r2 === Just ans

Nothing -> discardprop_bimob_o_leftMob ::

Bimobius -> Mobius -> Rational -> Rational -> Property

prop_bimob_o_leftMob bimob mob r1 r2 =

case (\x -> bimobius bimob x r2) =<< mobius mob r1 of

Just ans -> bimobius (bimob ||< mob) r1 r2 === Just ans

Nothing -> discardprop_bimob_o_rightMob ::

Bimobius -> Mobius -> Rational -> Rational -> Property

prop_bimob_o_rightMob bimob mob r1 r2 =

case (\y -> bimobius bimob r1 y) =<< mobius mob r2 of

Just ans -> bimobius (bimob ||> mob) r1 r2 === Just ans

Nothing -> discard

Now to the task at hand: implementing bimobius transformations on continued fractions.

cfBimobius :: Bimobius -> CFrac -> CFrac -> CFrac

cfBimobius (BM a b _ _ e f _ _) Inf y = cfMobius (Mobius a b e f) y

cfBimobius (BM a _ c _ e _ g _) x Inf = cfMobius (Mobius a c e g) x

cfBimobius (BM _ _ _ _ 0 0 0 0) _ _ = Inf

cfBimobius bm x y

| Just n <- bimobiusIntPart bm =

let bm' = Mobius 0 1 1 (- n) <>|| bm in n :+/ cfBimobius bm' x y

cfBimobius bm@(BM a b c d e f g h) x@(x0 :+/ x') y@(y0 :+/ y')

| g == 0 && h == 0 = consumeX

| h == 0 || h == 0 = consumeY

| abs (g * (h * b - f * d)) > abs (f * (h * c - g * d)) = consumeX

| otherwise = consumeY

where

consumeX = cfBimobius (bm ||< Mobius x0 1 1 0) x' y

consumeY = cfBimobius (bm ||> Mobius y0 1 1 0) x y'There are a few easy cases at the start. If either argument is infinite, then the infinite terms dominate, and the bimobius reduces to a unary mobius transformation of the remaining argument. On the other hand, if the denominator is zero, the result is infinite.

Next is the output case. This follows the same logic as the mobius transformations above:

But since m and m’ are now bimobius transformations, we just use one of the special composition forms defined earlier.

The remaining case is where we don’t have a new term to output, and must expand a term of the input, using the now-familiar equation, but composing with the ||< or ||> operators, instead.

There’s a new wrinkle here, though! Which of the two inputs should we expand? It seems best to make the choice that narrows the bounds the most, but I’ll be honest: I don’t actually understand the full logic behind this choice. I’ve simply copied it from Rosetta Code, where it’s not explained in any detail. We will rely on testing for confidence that it works.

Speaking of testing, we definitely need a few tests to ensure this works as intended. We’ll test the output against the Rational version of the function, and that the result is always in canonical form.

prop_cfBimobius_matches_Rational ::

NonNegative Rational ->

NonNegative Rational ->

Bimobius ->

Property

prop_cfBimobius_matches_Rational

(NonNegative r1)

(NonNegative r2)

bm =

case bimobius bm r1 r2 of

Just x

| x >= 0 ->

cfFromRational x

=== cfBimobius

bm

(cfFromRational r1)

(cfFromRational r2)

_ -> discardprop_cfBimobius_isCanonical ::

NonNegative Rational ->

NonNegative Rational ->

Bimobius ->

Bool

prop_cfBimobius_isCanonical

(NonNegative r1)

(NonNegative r2)

bm =

case bimobius bm r1 r2 of

Just x

| x >= 0 ->

isCanonical

(cfBimobius bm (cfFromRational r1) (cfFromRational r2))

_ -> discard

If you like, it’s interesting to compare the implementations of continued fraction arithmetic here with some other sources, such as:

- The Rosetta Code page mentioned above.

- Mark Dominus’ slides for his talk Arithmetic with Continued Fractions

These sources are great, but I think the code above is nicer. The reason is that with lazy evaluation instead of mutation, you can read the code more mathematically as a set of equations, and see what’s going on more clearly. This is quite different from the imperative implementations, which modify state in hidden places and require a lot of mental bookkeeping to keep track of it all. As I said, Haskell is a joy for this kind of programming.

Tying it all together

We now have all the tools we need to write Num and Fractional instances for CFrac. That’s a great way to wrap this all together, so that clients of this code don’t need to worry about mobius and bimobius transformations at all.

checkNonNegative :: CFrac -> CFrac

checkNonNegative Inf = Inf

checkNonNegative x@(x0 :+/ x')

| x < 0 = error "checkNonNegative: CFrac is negative"

| x > 0 = x

| otherwise = x0 :+/ checkNonNegative x'instance Num CFrac where

fromInteger n

| n >= 0 = n :+/ Inf

| otherwise = error "fromInteger: CFrac cannot be negative" (+) = cfBimobius (BM 0 1 1 0 0 0 0 1) x - y = checkNonNegative (cfBimobius (BM 0 1 (-1) 0 0 0 0 1) x y) (*) = cfBimobius (BM 1 0 0 0 0 0 0 1) signum (0 :+/ Inf) = 0

signum _ = 1 abs = id negate (0 :+/ Inf) = 0 :+/ Inf

negate _ = error "negate: CFrac cannot be negative"instance Fractional CFrac where

fromRational x

| x < 0 = error "fromRational: CFrac cannot be negative"

| otherwise = cfFromRational x recip = cfRecip (/) = cfBimobius (BM 0 1 0 0 0 0 1 0)

You can find the complete code and tests for this section at https://code.world/haskell#P_T4CkfWGu3bgSwFV0R04Sg. In addition to running the tests, I’ve included a few computations, demonstrating for example that:

Part 4: Going faster

Everything we’ve done so far is correct, but there’s one way that it’s not terribly satisfying. It can do a lot of unnecessary work. In this part, we’ll try to fix this performance bug.

You can find the code for this part in https://code.world/haskell#PP8uMTTchtH2F36nJYWT3VQ.

Consider, for example, a computation like x + 0.7. We know (and, indeed, Haskell also knows!) that 0.7 is a rational number. We should, then, be able to work this out by applying cfMobius to a simple mobius transformation.

But that’s not what GHC does. Instead, GHC reasons like this:

- We assume that

xis aCFrac. But+requires that both arguments have the same type, so0.7must also be aCFrac. - Since

0.7is a literal, GHC will implicitly add afromRational, applied to the rational form 7/10, which (using theFractionalinstance defined in the last part) usescfFromRationalto convert 0.7 into the continued

fraction0 :+/ 1 :+/ 2 :+/ 3 :+/ Inf. - Now, since we have two

CFracvalues, we must use the heavyweightcfBimobiusto compute the answer, choosing between the two streams at every step. In the process,cfBimobiusmust effectively undo the conversion of 7/10 into continued fraction form, doubling the unnecessary work.

Rewriting expressions

We can fix this with GHC’s rewrite rules. They can avoid this unnecessary conversion, and reduce constant factors at the same time, by rewriting expressions to use cfMobius instead of cfBimobius when one argument need not be a continued fraction. Similarly, when cfMobius is applied to a rational argument, it can be replaced by a simpler call to cfFromFrac after just evaluating the mobius transformation on the Rational directly. Integers are another special case: less expensive because their continued fraction representations are trivial, but but we might as well rewrite them, too.

{-# RULES

"cfrac/cfMobiusInt" forall a b c d n.

cfMobius (Mobius a b c d) (n :+/ Inf) =

cfFromFrac (a * n + b) (c * n + d)

"cfrac/cfMobiusRat" forall a b c d p q.

cfMobius (Mobius a b c d) (cfFromFrac p q) =

cfFromFrac (a * p + b * q) (c * p + d * q)

"cfrac/cfBimobiusInt1" forall a b c d e f g h n y.

cfBimobius (BM a b c d e f g h) (n :+/ Inf) y =

cfMobius (Mobius (a * n + c) (b * n + d) (e * n + g) (f * n + h)) y

"cfrac/cfBimobiusRat1" forall a b c d e f g h p q y.

cfBimobius (BM a b c d e f g h) (cfFromFrac p q) y =

cfMobius

( Mobius

(a * p + c * q)

(b * p + d * q)

(e * p + g * q)

(f * p + h * q)

)

y

"cfrac/cfBimobiusInt2" forall a b c d e f g h n x.

cfBimobius (BM a b c d e f g h) x (n :+/ Inf) =

cfMobius (Mobius (a * n + b) (c * n + d) (e * n + f) (g * n + h)) x

"cfrac/cfBimobiusRat2" forall a b c d e f g h p q x.

cfBimobius (BM a b c d e f g h) x (cfFromFrac p q) =

cfMobius

( Mobius

(a * p + b * q)

(c * p + d * q)

(e * p + f * q)

(g * p + h * q)

)

x

#-}There’s another opportunity for rewriting, and we’ve actually already used it! When mobius or bimobius transformations are composed together, we can do the composition in advance to produce a single transformation. Especially since these transformations usually have simple integer arguments that GHC knows how to optimize, this can unlock a lot of other arithmetic optimizations.

Fortunately, we’ve already defined and tested all of the composition operators we need here.

{-# RULES

"cfrac/mobius_o_mobius" forall m1 m2 x.

cfMobius m1 (cfMobius m2 x) =

cfMobius (m1 <> m2) x

"cfrac/mobius_o_bimobius" forall m bm x y.

cfMobius m (cfBimobius bm x y) =

cfBimobius (m <>|| bm) x y

"cfrac/bimobius_o_mobiusLeft" forall bm m x y.

cfBimobius bm (cfMobius m x) y =

cfBimobius (bm ||< m) x y

"cfrac/bimobius_o_mobiusRight" forall bm m x y.

cfBimobius bm x (cfMobius m y) =

cfBimobius (bm ||> m) x y

#-}Inlining, and not

This all looks great… but it doesn’t work. The problem is that GHC isn’t willing to inline the right things at the right times to get to exactly the form we need for these rewrite rules to fire. To fix this, I had to go back through all of the previous code, being careful to annotate many of the key functions with {-# INLINE ... #-} pragmas.

There are two forms of this pragma. When a trivial function might just get in the way of a rewrite rule, it’s annotated with a simple INLINE pragma so that GHC can inline and hopefully eliminate the function.

But when a function actually appears on the left hand side of a rewrite rule, though, we need to step more carefully. Inlining that function can prevent the rewrite rule from firing. We could use NOINLINE for this, but I’ve instead picked the form of inline that includes a phase number. The phase number prevents inlining from occurring prior to that phase. It looks something like:

{-# INLINE [2] cfRecip #-}Choosing a phase number seems to be something of a black art. I picked 2, and it worked, so… maybe try that one?

All these edits mean we have completely new versions of all of the code, and it’s in these files:

- Part 1: https://code.world/haskell#PKVqY8mch2YP__zZG3KJcaQ

- Part 2: https://code.world/haskell#PnYywOggsg_WaDdNXIpiHkg

- Part 3: https://code.world/haskell#PEKM_o0o1WbKnKhjUIjQ_hQ

- Part 4: https://code.world/haskell#PP8uMTTchtH2F36nJYWT3VQ

Done… but how do we know it worked?

Verifying the rewrites

Rewrite rules are a bit of a black art, and it’s nice to at least get some confirmation that they are working. GHC can tell us what’s going on if we pass some flags. Here are some useful ones:

-ddump-rule-rewriteswill print out a description of every rewrite rule that fires, including the before-and-after code.-ddump-simplwill print out the final Core, which is GHC’s Haskell-like intermediate language, after the simplifier (which applies these rules) is done. Adding-dsuppress-allmakes the result more readable by hiding metadata we don’t care about.

In CodeWorld, we can pass options to GHC by adding an OPTIONS_GHC pragma to the source code. If you looked at the Part 4 link above, you saw that I’ve done so. The output is long! But there are a few things we can notice.

First, we named our rules, so we can search for the rules by name to see if they have fired. They do! We see this:

...Rule fired

Rule: cfrac/cfBimobiusInt1

Module: (CFracPart4)...Rule fired

Rule: timesInteger

Module: (BUILTIN)

Before: GHC.Integer.Type.timesInteger ValArg 0 ValArg n_aocc

After: 0

Cont: Stop[RuleArgCtxt] GHC.Integer.Type.IntegerRule fired

Rule: plusInteger

Module: (BUILTIN)

Before: GHC.Integer.Type.plusInteger

ValArg src<GHC/In an imported module> 0 ValArg 1

After: 1...Rule fired

Rule: cfrac/bimobius_o_mobiusLeft

Module: (CFracPart4)...Rule fired

Rule: cfrac/cfBimobiusInt2

Module: (CFracPart4)

Good news: our rules are firing!

I highlighted the timesInteger and plusInteger rules, as well. This is GHC taking the result of our rules, which involve a bunch of expressions like a1 * a2 + b1 * c2, and working out the math at compile time because it already knows the arguments. This is great! We want that to happen.

But what about the result? For this, we turn to the -ddump-simpl output. It’s a bit harder to read, but after enough digging, we can find this (after some cleanup):

main4 = 1

main3 = 0

main2 = 2

main1

= $wunsafeTake

50# (cfToDecimal ($wcfMobius main4 main4 main3 main2 sqrt5))This is essentially the expression take 50 (cfDecimal (cfMobius (Mobius 1 1 0 2) sqrt5)). Recall that what we originally wrote was (1 + sqrt5) / 2. We have succeeded in turning an arithmetic expression of CFrac values into a simple mobius transformation at compile time!

Conclusion

We finally reach the end of our journey. There are still some loose ends, as there always are. For example:

- There’s still the thing with negative numbers, which I left as an exercise.

- Arithmetic hangs when computing with irrational numbers that produce rational results. For example, try computing

sqrt2 * sqrt2, and you’ll be staring at a blank screen while your CPU fan gets some exercise. This might seems like a simple bug, but it’s actually a fundamental limitation of our approach. An incorrect term arbitrarily far into the expression forsqrt2could change the integer part of the result, so any correct algorithm must read the entire continued fraction before producing an answer. But the entire fraction is infinite! You could try to fix this by special-casing the cyclic continued fractions, but you can never catch all the cases. - There’s probably plenty more low-hanging fruit for performance. I haven’t verified the rewrite rules in earnest, really. Something like Joachim Breitner’s inspection testing idea could bring more rigor to this. (Sadly, libraries for inspection testing require Template Haskell, so you cannot try it within CodeWorld.)

- Other representations for exact real arithmetic offer more advantages. David Lester, for example, has written a very concise exact real arithmetic library using Cauchy sequences with exponential convergence.

In the end, though, I hope you’ve found this part of the journey rewarding in its own right. We developed a non-trivial library in Haskell, derived the code using equational reasoning (even if it was a bit fuzzy), tested it with property testing, and optimized it with rewrite rules. All of these play very well with Haskell’s declarative programming style.